One of my favorite things in the world is a thought-provoking book, and there's not much I enjoy more on a lazy Saturday than wandering the aisles of books at second-hand stores.

There are so many gems to be found, like a coffee table book from 22 years ago about the effects house Digital Domain, filled with stories about how they accomplished the visual effects for some of history's biggest movies, like Apollo 13 and Titanic.

For the first time in the years since I found it, I was going through my collection to pick a couple of fresh books for the living room. As I was leafing through its amazing pictures of physical models and CGI rigging, I found myself caught by the text of the introduction.

It addressed a concern I see circulating through many industries, centered around generative AI:

One of the greatest misconceptions about modern movies is that visual effects are generated by computers. Nothing could be further from the truth. Human inventiveness is the most important ingredient and it always will be. Computers offer amazing new possibilities, but the underlying challenges of movie illusions are the same today as they were nearly a century ago when the industry was young.

People, not machines, drive the craft of visual effects.

Those words were prescient for so long ago, and I strongly believe the same will be shown to be the case with generative AI. Some of the dissonance we see in dev experience around AI-assisted coding, once you look through the fuzziness of hype, seems to be correlated with the code's target problem space.

Sure, AI can spit out variations on code that's been written countless times, though even in these cases it's arguably not that skilled when measured by an experienced dev.

The space where I've had the most problems using AI to code, though, is in trying to create things that don't exist yet. There I've found it counterproductive to even open the GPT interface.

Because I spend most of my time in this realm of de novo creation, I find that reading code and docs is still far more productive than GPT when attempting to understand how a library or system works.

Augmenting Reality

To get a grip on how much genAI issues can impact your ability to create, I did an experiment recently. I attempted to use ChatGPT to help me comprehend the machinations that happen beneath and between Kubernetes and the container runtime, and to help plan the implementation of a new rootfs handling feature in containerd.

I spent ~2 hours a day for 4 days doing research and dev planning with just GPT-4 and my notes, then on the 5th day I began reading the containerd source and docs.

Wow, after 2 hours in that source and architectural documentation I basically had to throw away the previous 8h of "work".

The thing that scared me most about this experiment was that by the time I started feeding my mind from the source of actual truth, it was already so filled with GPT hallucinations that unraveling the lies and misconceptions carried a surprising cost in time and mental stamina.

The effort I spent rooting GPT's lies out of my mind could have been spent actually learning, analyzing, and ultimately creating things instead.

Gazing back upon a GPT-Tinged Memory

Now, the saturation aspect of this experiment was intentional and, I think, quite enlightening.

I wanted to produce as stark a juxtaposition as possible: a million hallucinated papercuts, inflicted without the respite of truth, in a domain where I have significant experience but in an area where I lacked deep knowledge of the specifics.

I then wanted to lean on my skill in the domain to become an expert in the specifics of the area, and to do a comparative analysis between what I'd learned from a source of truth and what I'd learned via reality filtered through the world's most advanced GPT.

Most of the Kubernetes world is replete with Go code and is organized around certain patterns like control loops and eventual consistency. These are areas I spend a lot of time not just studying but also implementing, so it was a stack I felt I could rapidly ingest.

In the end, I was actually pretty shocked by the magnitude of the difference between what I thought I knew after working with GPT and how I learned the system actually worked.

But what may have shocked me even more is the difference between the richness of the real world and the world I perceived through GPT-4; perhaps it's not a stretch to say that humans are many times more interesting than GPTs think we are.

Alan Turing showed, through his analysis of the halting problem, that even his simple machines can behave in ways no general procedure can predict. This is the infinite space in which we ask our genAI to operate, when the space in which it is taught is not that of understanding but only of concept mapping.

My AI is a Narcissist

It is pretty difficult for most people to hold a fact in two conflicting states in their mind at once, in a way that lets them quickly swap something they learned for its correction, especially when corrections become the rule rather than the exception.

Even after you learn the real truth, it can sometimes be difficult to collapse the dissonance wave, and you find yourself second-guessing when you go back to remember. In fact, this may even incentivize one to trust implicitly in order to avoid the dissonance altogether, praying that the follow-on consequences are minimal.

I guess one thing that's left to be seen is whether this is an additive debuff, but one thing is for sure: people attempting to use GPT to create things in domains where they lack practical experience are doomed to wander in a fog that they're confidently blind to.

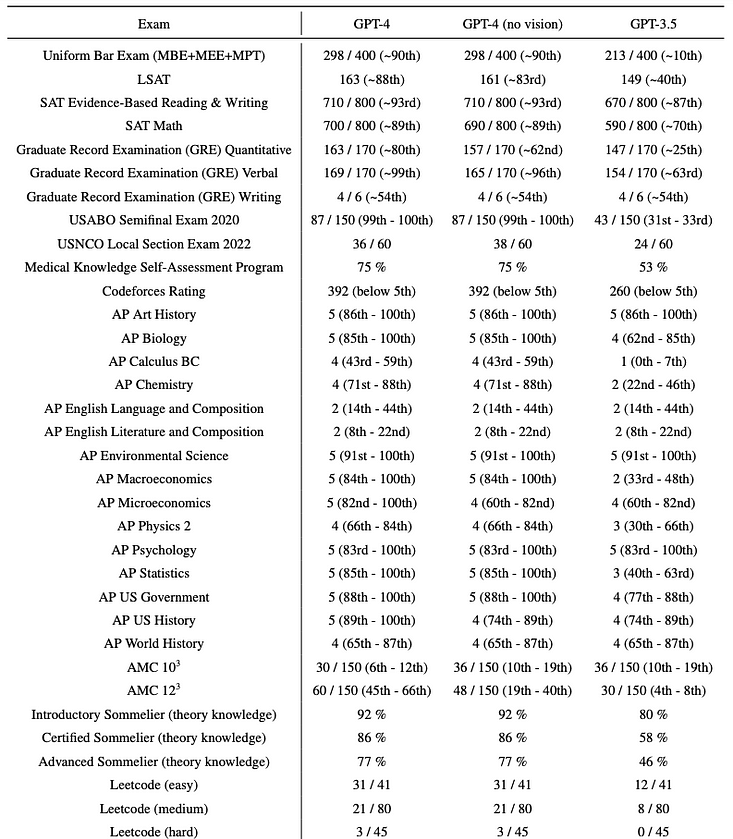

Looking at the recent GPT-4 Technical Report from March '23, it's interesting to note the things that GPT is "good" at. Sure, it passes the Bar Exam, but law is probably one of the human strictures most thoroughly described in prose. Look at where it struggles, though, and you can see it's in areas that require analysis, consideration, and inventiveness.

A confounder that we will shortly need to reckon with is Goodhart's law; it stands to reason that these measures will become less reliable once coverage of the AI's travails with them is published as analysis and data that it is then eventually trained upon.

Fin

In a follow-up post, I will talk about why I think genAI can never really root a creative work outside of human ingenuity. As perhaps a thought-provoking hint, that post's title will be "You can't Factor Creation".