One place where I've been really unhappy with the UX of modern container-based SDLC pipelines is the creation of the images themselves. Docker was a disruptor: it was the first to bring a (mostly) effective UX to the concept of "containers". But it hasn't kept up. The dockerfile methodology has always felt halfway between cache-efficient and delivery-efficient, and ends up not being particularly efficient at either.

One of the most glaring compromises I ran into while testing how the content-based layer IDs work in docker is that just the act of building an image sets the mtimes on the files in the container image to the current build time. This effectively makes every layer in the build impure, rendering the hash useless as a measurement.
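To make the mtime problem concrete, here is a minimal sketch in Python. It is not how docker computes layer IDs; it just assumes a layer is a tar archive hashed with SHA-256, and the helper name `layer_digest` is made up for illustration. The same file bytes packed with two different timestamps produce two different digests, while clamping the timestamp makes the digest depend on content alone.

```python
import hashlib
import io
import tarfile


def layer_digest(files: dict, mtime: int) -> str:
    """Pack files into an in-memory tar (a stand-in for an image layer) and hash it."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name in sorted(files):  # fixed ordering so only mtime varies
            info = tarfile.TarInfo(name=name)
            info.size = len(files[name])
            info.mtime = mtime  # the only thing that changes between "builds"
            tar.addfile(info, io.BytesIO(files[name]))
    return hashlib.sha256(buf.getvalue()).hexdigest()


files = {"app/main.txt": b"hello\n"}

# Same bytes, "built" one second apart: the digests differ.
build_a = layer_digest(files, mtime=1_700_000_000)
build_b = layer_digest(files, mtime=1_700_000_001)
print(build_a == build_b)  # False

# With mtime clamped to a fixed value, the digest is a function of content alone.
clamped_a = layer_digest(files, mtime=1)
clamped_b = layer_digest(files, mtime=1)
print(clamped_a == clamped_b)  # True
```

The clamped case is the property you want from a build: identical inputs, identical hash.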

At the end of the day it works, and shipping is really one of the only reliable SDLC metrics that most management invests in understanding. But security shifts ever further left as upper management is forced (ostensibly by laws created in the face of actual breaches) to address issues like supply-chain attacks. With a dearth of software security professionals at their disposal, that pushes even more of the weight of provenance-style concerns onto the developers.

I've spent a lot of time thinking about how the complexity of the developer UX impacts devs' ability to build sound solutions in the kubernetes infra space. Empirically, it seems that most devs don't know how to build full-stack (e.g. down to configuring netpol and HPA), and in most cases will probably never have the time to become experts in it. This is where the ops part of devops usually starts to gel into an individual specialization in a lot of orgs.

Similarly, a developer doing yarn install does not have the expertise to build secure infrastructure around ensuring the deps in their yarn.lock file, or the FROM in their dockerfile, have not been compromised. And these are just the security-critical aspects. The dockerfile method of building images has many other landmines that are not security critical but are huge productivity killers (cf. caching) and are very difficult to handle properly for a single image, let alone a whole org.

Case Study

    # escape=`

    # Use the latest Windows Server Core image with .NET Framework 4.8.
    FROM mcr.microsoft.com/dotnet/framework/sdk:4.8-windowsservercore-ltsc2019

    # Restore the default Windows shell for correct batch processing.
    SHELL ["cmd", "/S", "/C"]

    # Use temp dir for environment setup
    WORKDIR C:\TEMP

    # Download the Build Tools bootstrapper.
    ADD https://aka.ms/vs/16/release/vs_buildtools.exe vs_buildtools.exe

    # Install Build Tools with msvc excluding workloads and components with known issues.
    RUN vs_buildtools.exe --quiet --wait --norestart --nocache `
        --installPath C:\BuildTools `
        --add Microsoft.VisualStudio.Workload.VCTools --includeRecommended `
        --add Microsoft.VisualStudio.Component.VC.ATL `
        --remove Microsoft.VisualStudio.Component.Windows10SDK.10240 `
        --remove Microsoft.VisualStudio.Component.Windows10SDK.10586 `
        --remove Microsoft.VisualStudio.Component.Windows10SDK.14393 `
        --remove Microsoft.VisualStudio.Component.Windows81SDK `
     || IF "%ERRORLEVEL%"=="3010" EXIT 0

    #ENV chocolateyVersion 0.10.3
    ENV ChocolateyUseWindowsCompression false

    # Set your PowerShell execution policy
    RUN powershell Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Force

    # Install Chocolatey
    RUN powershell -NoProfile -ExecutionPolicy Bypass -Command "iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))" && SET "PATH=%PATH%;%ALLUSERSPROFILE%\chocolatey\bin"

    # Install Chocolatey packages
    RUN choco install git.install bzip2 -y && choco install cmake --version 3.17.2 --installargs 'ADD_CMAKE_TO_PATH=System' -y

    # Copy dependency setup script into container
    COPY .ci\depsetup.ps1 depsetup.ps1

    # Downloaded dependency versions
    ENV MATHFU_VERSION master
    ENV CEF_VERSION cef_binary_81.3.10+gb223419+chromium-81.0.4044.138_windows64

    # Install dependencies
    RUN powershell.exe -NoLogo -ExecutionPolicy Bypass .\depsetup.ps1

    # Change workdir to build workspace
    WORKDIR C:\workspace

    # Define the entry point for the docker container.
    # This entry point starts the developer command prompt and launches the PowerShell shell.
    ENTRYPOINT ["C:\\BuildTools\\Common7\\Tools\\VsDevCmd.bat", "&&", "powershell.exe", "-NoLogo", "-ExecutionPolicy", "Bypass"]

The dependency setup script it copies in (.ci\depsetup.ps1):

    ######
    # Install project dependencies
    ######

    echo "MATHFU_VERSION: $Env:MATHFU_VERSION"
    echo "CEF_VERSION: $Env:CEF_VERSION"

    Push-Location $Env:USERPROFILE

    # Get mathfu
    git clone --recursive https://github.com/joshperry/mathfu.git
    Push-Location mathfu
    git checkout "$Env:MATHFU_VERSION"
    Pop-Location
    setx MATHFU_ROOT "$Env:USERPROFILE\mathfu"

    # Get cef
    $CEF_VERSION_ENC=[uri]::EscapeDataString($Env:CEF_VERSION)
    Invoke-FastWebRequest -URI "http://opensource.spotify.com/cefbuilds/$CEF_VERSION_ENC.tar.bz2" -OutFile "$Env:USERPROFILE\$Env:CEF_VERSION.tar.bz2"
    bunzip2 -d "$Env:CEF_VERSION.tar.bz2"
    tar xf "$Env:CEF_VERSION.tar"
    Remove-Item "$Env:CEF_VERSION.tar" -Confirm:$false
    setx CEF_ROOT "$Env:USERPROFILE\$Env:CEF_VERSION"

    Pop-Location

And the build itself:

    # Generate visual studio sln
    .\gen_vs2019.bat cibuild

    # Execute the build
    Push-Location cibuild
    cmake --build . --config Release
    Pop-Location

That's a lot of ish to just drop on you, I know. It matters to the narrative I'm building, but you don't really need to understand it in detail.

This is the containerized build setup for a VR project I've been working on. I know it's a lot of windowese (heck, I don't even have powershell syntax highlighting on the site), but although the tooling differs, the process is mostly the same on other OSs like Linux.

This is fresh in my mind because I've been working on moving this project over to Linux at the same time that I happened to be trying a nix+nixos immersion. I am going to do a comparison, because the difference feels to me as stark a shift as the move from VMs to containers+k8s. Keep in mind that the above also leaves out the manual process of building zmq libs ahead of time, as well as the vendored deps that I used to check into the repo (I just never got around to automating it; it's a pain).

    {
      description = "A flake for building ovrly";

      inputs.nixpkgs.url = "github:nixos/nixpkgs/nixos-22.11";

      inputs.cef.url = "https://cef-builds.spotifycdn.com/cef_binary_111.2.7+gebf5d6a+chromium-111.0.5563.148_linux64.tar.bz2";
      inputs.cef.flake = false;

      inputs.mathfu.url = "git+https://github.com/google/mathfu.git?submodules=1";
      inputs.mathfu.flake = false;

      inputs.openvr.url = "github:ValveSoftware/openvr";
      inputs.openvr.flake = false;

      inputs.flake-utils.url = "github:numtide/flake-utils";

      inputs.nixgl.url = "github:guibou/nixGL";
      inputs.nixgl.inputs.nixpkgs.follows = "nixpkgs";

      outputs = { self, nixpkgs, cef, mathfu, openvr, flake-utils, nixgl }:
        flake-utils.lib.eachSystem [ "x86_64-linux" ] (system:
          let
            pkgs = import nixpkgs { inherit system; overlays=[ nixgl.overlay ]; };

            deps = with pkgs; [
              # cef/chromium deps
              alsa-lib atk cairo cups dbus expat glib libdrm libva libxkbcommon mesa nspr nss pango
              xorg.libX11 xorg.libxcb xorg.libXcomposite xorg.libXcursor xorg.libXdamage
              xorg.libXext xorg.libXfixes xorg.libXi xorg.libXinerama xorg.libXrandr
              # ovrly deps
              cppzmq fmt_8 glfw nlohmann_json spdlog zeromq
            ];

            ovrlyBuild = pkgs.stdenv.mkDerivation {
              name = "ovrly";
              src = self;

              nativeBuildInputs = with pkgs; [
                cmake
                ninja
                autoPatchelfHook
              ];

              buildInputs = deps;

              FONTCONFIG_FILE = pkgs.makeFontsConf {
                fontDirectories = [ pkgs.freefont_ttf ];
              };

              cmakeFlags = [
                "-DCEF_ROOT=${cef}"
                "-DMATHFU_DIR=${mathfu}"
                "-DOPENVR_DIR=${openvr}"
                "-DPROJECT_ARCH=x86_64"
                "-DCMAKE_CXX_STANDARD=20"
              ];
            };

            dockerImage = pkgs.dockerTools.buildImage {
              name = "ovrly";
              config =
                let
                  FONTCONFIG_FILE = pkgs.makeFontsConf {
                    fontDirectories = [ pkgs.freefont_ttf ];
                  };
                in
                {
                  Cmd = [ "${ovrlyBuild}/bin/ovrly" ];
                  Env = [
                    "FONTCONFIG_FILE=${FONTCONFIG_FILE}"
                  ];
                };
            };
          in {
            packages = {
              ovrly = ovrlyBuild;
              docker = dockerImage;
            };

            defaultPackage = ovrlyBuild;

            devShell = pkgs.mkShell {
              nativeBuildInputs = [ pkgs.cmake ];
              buildInputs = deps;

              # Exports as env-vars so we can find these paths in-shell
              CEF_ROOT=cef;
              MATHFU_DIR=mathfu;
              OPENVR_DIR=openvr;

              cmakeFlags = [
                "-DCMAKE_BUILD_TYPE=Debug"
                "-DCEF_ROOT=${cef}"
                "-DMATHFU_DIR=${mathfu}"
                "-DOPENVR_DIR=${openvr}"
                "-DPROJECT_ARCH=x86_64"
                "-DCMAKE_VERBOSE_MAKEFILE:BOOL=ON"
                "-DCMAKE_CXX_STANDARD=20"
              ];
            };
          }
        );
    }

I notably also do not have syntax highlighting for nix (at time of writing).

I do want to talk about the nix language for a couple paragraphs, but even without understanding the above, I want to point out what I see as the highlights.

Besides replacing all of the above scripts (which may have been Makefile+bash on Linux), this provides the following features:

- hermetic builds: flake.lock, custom canonicalizing archive format (nar), all output file times set to epoch+1, $HOME == "/homeless-shelter", etc.

- build-time deps: a number of deps are already in the nix store, some already compiled; for the rest, using flake inputs basically just puts them in a folder for you and gives you the path (amazing). I was able to move all dep handling to the flake in 3 or 4 iterations.

- runtime deps: after the build, the build scripts will find all files referenced from the store by any of the outputs (false positives really can't happen because of hashes in the paths). It considers these the runtime deps and automatically sets up the dependency tree for them!

- container image: this can build a container image with nix build .#docker, including all the deps, WITHOUT DOCKER! It links a tar.gz of the layer stack (in docker save format) as ./result; use docker load < ./result to get it into your docker image store. I'll talk more about this, but this is close to #1 wow.

- nix develop: the devShell derivation is realized when you run nix develop in the same dir as the flake. This puts you into a nix shell with all the deps and tooling in the path. All of the tooling exposed by mkDerivation for its automatic build process is also available: run cmakeConfigurePhase and it will create ./build, enter it, and use your CMakeLists.txt and cmake to fill it with either a makefile or ninja project ready to build. Running buildPhase in the shell will actually build the project. The ease of iteration and the amount of data and configurability available here is really outstanding.

- nix build: running this will build the flake and link ./result to the completed build output folder in the nix store. In this case, ./result/bin/ovrly will run the OpenGL VR application.

- content-addressed: because of the hermetic builds we get support for creating a fully content-addressed provenance stack. We can prove where every single component came from in every build, down to the bytes of the source code. :googly-eyes:
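The runtime-deps bullet above is the one that sounds most like magic, so here is a rough sketch of the idea in Python. This is not nix's implementation. The function name is made up, and the store paths use placeholder hashes rather than real ones. The point is just that a store path's hash is long and unique enough that finding it anywhere in the output bytes is strong evidence of a real reference.

```python
def find_runtime_deps(output: bytes, build_inputs: list) -> list:
    """Return the build inputs whose store-path hash appears in the output bytes."""
    deps = []
    for path in build_inputs:
        # A store path looks like /nix/store/<hash>-<name>; take the <hash> part.
        hash_part = path.removeprefix("/nix/store/").split("-", 1)[0]
        if hash_part.encode() in output:
            deps.append(path)
    return deps


# Placeholder hashes for illustration only; real ones are 32-character base-32 strings.
zeromq = "/nix/store/" + "a" * 32 + "-zeromq"
cmake = "/nix/store/" + "b" * 32 + "-cmake"

# Pretend this is a built binary that embeds a library search path pointing at zeromq.
binary = b"\x7fELF...RUNPATH=" + zeromq.encode() + b"/lib\x00..."

runtime_deps = find_runtime_deps(binary, [zeromq, cmake])
print(runtime_deps == [zeromq])  # True: cmake was only needed at build time
```

Anything that was an input to the build but leaves no hash behind in the output, like cmake here, simply drops out of the runtime closure.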

All of this together, the nix language itself notwithstanding, is honestly pretty mindbending for me. Even just typing this out for the first time makes it hit harder than the impact alone of actually using it to solve a problem.

Bridging the Divide

Building a docker image is no small thing, and I have to say it's something we were struggling to solve at my last dayjob. We had a pretty great CI/CD system using gitlab+gitops+k8s to keep applications moving out to prod with pretty high frequency. In the shadow of supply-chain attacks like SolarWinds (our network guys are pros and they had our instance well isolated) we were very cognizant of our need to address our code provenance.

We had begun scanning all containers as they landed in our registry and used that tooling to generate software BOMs as well as threat scores. What we were still in the planning phases on was the nonrepudiation and triagability of the layers in our docker stacks.

All of our projects were using FROM to reference images directly upstream from dockerhub et al. This setup is ripe for zeroday supply-chain attacks on the registry that your scanner won't pop on and is exactly why we were working to address it.

Our plan of attack at that point was to create blessed base images that projects could derive FROM. The devops/security team would be in charge of providing these images, which would most likely also share a common base through one or more layers. This would give us a single point of touch to address issues in all downstream projects.

We could flag base image builds in our scanner (also affecting their ability to run in prod) and force the CD pipeline to do a new build with the fixed base immediately, including automated deployment to production.
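As a sketch of what enforcing "derive FROM a blessed base" could look like in CI, here is a small Python check. It is an illustration, not the tooling we had. The registry prefix is hypothetical, and requiring a digest pin is my own assumption about what "blessed" should mean.

```python
import re

# Hypothetical internal registry path where the devops/security team publishes bases.
BLESSED_PREFIX = "registry.example.internal/base/"

# Matches the image reference on each FROM line, skipping an optional --platform flag.
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)", re.IGNORECASE | re.MULTILINE
)


def unblessed_bases(dockerfile: str) -> list:
    """Return FROM references that are not digest-pinned images from the blessed registry."""
    bad = []
    for ref in FROM_RE.findall(dockerfile):
        if not (ref.startswith(BLESSED_PREFIX) and "@sha256:" in ref):
            bad.append(ref)
    return bad


upstream = "FROM mcr.microsoft.com/dotnet/framework/sdk:4.8-windowsservercore-ltsc2019\n"
blessed = "FROM " + BLESSED_PREFIX + "dotnet-sdk@sha256:" + "0" * 64 + "\n"

print(unblessed_bases(upstream))  # the upstream reference is reported
print(unblessed_bases(blessed))   # []
```

This simple version would also flag multi-stage references to an earlier stage name (FROM builder), so a real check would need to track AS aliases.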

We already had a number of package and project-level prophylactics for attacks on registries like ruby gems or pip, but these require complex deployments that understand applications syntactically at the deployment level. This is one place where containers excel in assisting in final package measurements.

This left on the table one big elephant to swallow: integration of our CI and devops systems. At the time we were still running all of our build machines as either VMs or, increasingly, bare metal. We already had a bunch of baremetal k8s nodes, we had a dictum to run prod builds in our secure prod environment, and we wanted to consolidate our runtime infrastructure management under k8s.

This is the direction we were heading, with docker image builds causing quite a roadblock. Because building docker images requires mounting filesystems to create and manipulate the overlays, it necessarily needs to run as root. This is ostensibly the job of the docker daemon, though giving a process access to the daemon also gives it an easy escalation path to running arbitrary code as root.

Kubernetes is moving towards a user-namespaced future, but we're not there yet. We couldn't allow build scripts that run on arbitrary commits to have access to the docker daemon on the hosts executing production workloads. There are other solutions, like a separate cluster, affinity, running microVMs, podman, buildah and the like, but none are great solutions, particularly in the face of the dockerfile method's poor approach to provenance.

If we're going to rewrite the build system to better... support provenance, I don't think it's out of the question to look at solutions on the fringe of the space to see what's available to salve what ails us.

A New Standard?

Nix itself is in some ways unapproachable: the documentation lags and has holes, the shift to flakes muddies the future, and functional programming isn't well understood even in the ranks of the general dev pool. It's not a silver bullet by any means, but it has even more power than I let on here.

If you're familiar with nix then you'll know how the option system works, and how the different phases of making the derivation and then realizing it jibe. For those unfamiliar, I'd like to put my own color on something that I see as a linchpin.

You have not only the ability to define a language for building, packaging, and deploying your application with strong provenance, but you also have the ability to define a language for configuring the application. That configuration can then additionally play an important part in the provenance of the total application and its deployment.

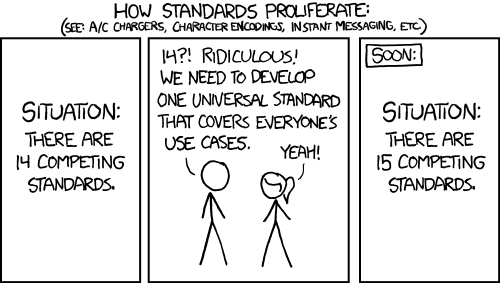

Something that tumbles in my mind any time I'm slogging through editing one of the millions of config files on a Linux system (whether directly or through a change management system like puppet or ansible) is "man we really need a standard for application configuration". And then in the next beat an image of the apropos always-relevant xkcd pops into my head.

While this may be yet another standard, another abstraction, in the pursuit of the one to bind them all, I feel like the drip this capability puts on top of everything I've laid out above really sells it.

This is already becoming quite long-winded, and without a proper primer on nix it would be difficult to go much deeper into how this shifts things. But I do want to end with a quick look at where we might go from here with an eye towards devX.

When I let my imagination run a bit, it immediately bears much fruit. I'll share one such line and leave the others for future discussion and your own rumination.

We can build binaries with their deps, package dynamic languages with theirs, and define derivations that instantly give us a well-defined dev environment. Why can't we have a derivation that builds the k8s deployment manifests? Well, great minds usually think alike, and I'm by no means an early adopter of the ways of nix: https://github.com/hall/kubenix.

I know many who decry their newfound status as yaml jockeys, and I could see any number of lispers or schemers who would jump at the chance to throw functions at the problem rather than tabs (or was it spaces...)
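To give a feel for "functions instead of yaml" without claiming anything about kubenix's actual API, here is a rough sketch in Python. The `deployment` helper and the image reference are made up for illustration. The manifest is plain data returned from a function and serialized at the end (JSON here, which kubectl also accepts).

```python
import json


def deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Build a minimal apps/v1 Deployment manifest as plain data."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector and the pod template labels come from one value,
            # so they cannot drift apart the way hand-edited yaml can.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical image reference; in a nix-driven pipeline it would come from the image build.
manifest = deployment("ovrly", "registry.example.internal/ovrly:dev", replicas=2)
print(json.dumps(manifest, indent=2))
```

Once the manifest is the return value of a function, the image reference can be an input computed by the same build that produced the image, which is where the provenance story and the deployment story meet.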